I love a well-documented programming library. It invites you right in to join the party. Accessible documentation really helps a library live up to its name: a place of public discovery where you can (sometimes literally) choose your own adventure. Not long ago, I came across the Face Recognition library for Python. It's a fun little tool for finding and comparing faces in an image, and its documentation is excellent. There are GIFs and pictures to go along with explanations of each feature. The authors walk you through a variety of use cases, including what's possible when you combine the Face Recognition library with other Python modules.

The overall effect of top-notch documentation like this is that users immediately want to experiment with all of the library's cool features, and they're given a clear idea of how to do so. In my case, I wanted to create a web app that uses the Face Recognition library on the backend to recognize faces in images that users pass in from the client side as URLs. Because the Python library works with image files rather than URLs, I would get to practice creating and manipulating files with Python. I would also need to find a way to take the information the Face Recognition library provides and use it to put a name tag on each face on the frontend.

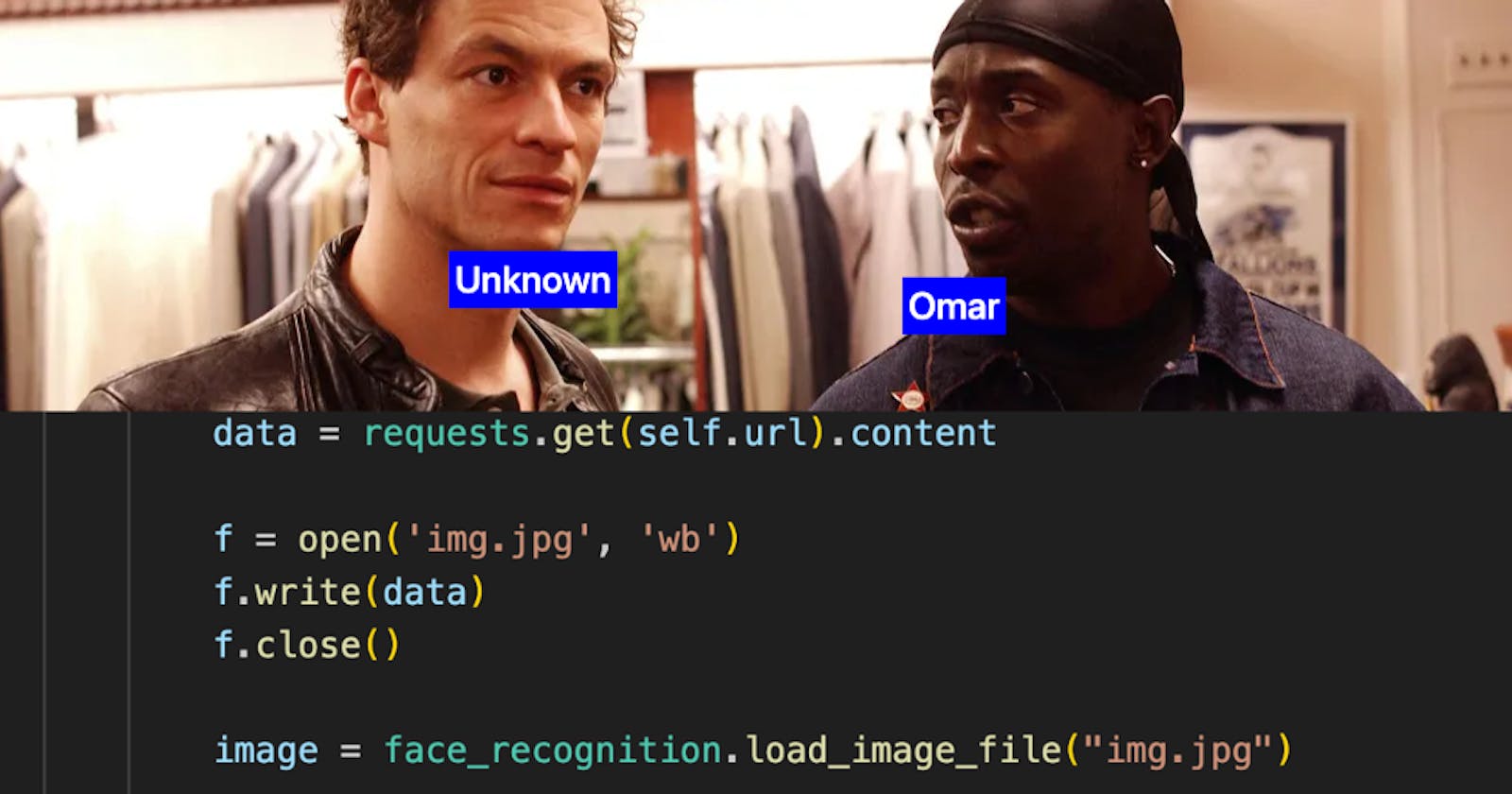

First off, I'm using a Flask backend and a React frontend for this project. While there is plenty of client-side code involved, I want to focus mostly on the backend in this post, since that's where all of the interesting libraries and troubleshooting were. The most relevant piece of information from the frontend is the format of the data the server needs to send to it. I wanted to render an image with a name tag on each face. Here's how it could look:

To do this, I need the backend to process the image, compare it to any "known images," and then return an array of objects, one for each face in the image. Any face that doesn't match a known name is labeled "Unknown" (no offense, Detective McNulty), and the rest get names. Each object also has pixel coordinates to locate the face:

```json
{
  "data": [
    {
      "name": "Unknown",
      "coordinates": [81, 442, 236, 287]
    },
    {
      "name": "Omar",
      "coordinates": [98, 734, 253, 580]
    }
  ]
}
```
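On the Flask side, that payload can be built from plain Python objects. The sketch below shows one way to do it, not the post's actual route code: the `FaceTag` dataclass and the `to_payload()` helper are hypothetical names, and `json.dumps` stands in for whatever serialization the route uses (Flask's `jsonify` would also work).

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class FaceTag:
    name: str                                # "Unknown" when no known face matched
    coordinates: Tuple[int, int, int, int]   # (top, right, bottom, left) in pixels


def to_payload(tags: List[FaceTag]) -> str:
    """Serialize the tags into the {"data": [...]} shape the frontend expects."""
    return json.dumps({"data": [asdict(tag) for tag in tags]})


print(to_payload([FaceTag("Unknown", (81, 442, 236, 287)), FaceTag("Omar", (98, 734, 253, 580))]))
```

Because `json.dumps` writes tuples as JSON arrays, the `(top, right, bottom, left)` tuples come out in exactly the shape shown above.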

Now that the desired output is clear, it's time to design the database schema and logic that will power the backend routes. In addition to the URL of the image to be scanned for faces, users also need a login profile that they can populate with images of the people they know, each matched to a name. I created a straightforward User schema with a username, a hashed password, and a one-to-many relationship to all the images they submit to show the app who they know:

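```python
# Assumes `db = SQLAlchemy()` (Flask-SQLAlchemy) is created elsewhere in the app
from sqlalchemy_serializer import SerializerMixin


class User(db.Model, SerializerMixin):
    __tablename__ = 'users'

    id = db.Column(db.Integer, primary_key=True)
    # usernames are text, so this is a String column rather than an Integer
    username = db.Column(db.String, unique=True, nullable=False)
    _password_hash = db.Column(db.String)

    # one user -> many Image rows; cascade='all' also deletes them with the user
    images = db.relationship('Image', back_populates='user', cascade='all')
```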

The Image model stores both the URL for the image and the person's name as strings. The first hurdle in using the Face Recognition library was turning the user's image URL into a file the library could work with. This is right in Python's wheelhouse: the requests library gives us an easy way to fetch the content at the URL, which we can then write to a file:
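A minimal sketch of that fetch-and-write step follows. To keep it runnable without third-party packages, it swaps in the standard library's `urllib.request` for requests; with requests, the fetch is `requests.get(url).content`, as in `getCoordinates()` later in this post. The `save_url_to_file()` name is hypothetical.

```python
import urllib.request
from pathlib import Path


def save_url_to_file(url: str, path: str = "img.jpg") -> Path:
    """Fetch the raw bytes at `url` and write them to `path` in binary mode."""
    # Standard-library stand-in for requests.get(url).content
    with urllib.request.urlopen(url, timeout=10) as response:
        data = response.read()
    out = Path(path)
    out.write_bytes(data)  # same effect as open(path, 'wb').write(data): creates or overwrites
    return out
```

Calling `save_url_to_file("https://example.com/photo.jpg")` would leave the downloaded bytes in `img.jpg`, ready for `face_recognition.load_image_file()`.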

A file named "img.jpg" will then be created in the current working directory (the directory the server was started from, which is not necessarily the folder containing the script). We can then use this image for all of our facial recognition needs and delete it when we're done. I'll use this technique whenever we need to process an image from its URL.
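One caveat with a fixed filename like "img.jpg": if two requests are processed at the same time, they can overwrite each other's file, and an exception raised before the delete leaves the file behind. A sketch of one alternative, assuming you already have the image bytes: a hypothetical `temporary_image()` context manager built on the standard `tempfile` module, which gives each call a uniquely named file and removes it even if an error occurs.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temporary_image(data: bytes, suffix: str = ".jpg") -> Iterator[str]:
    """Write `data` to a uniquely named temp file, yield its path, then always delete it."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        yield path
    finally:
        os.remove(path)  # runs on normal exit and when the with-body raises
```

Inside `getCoordinates()`, the body would become `with temporary_image(data) as path:` followed by `image = face_recognition.load_image_file(path)`, with no separate `os.remove()` call.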

We're ready to start using the tools built into the Face Recognition library. The first thing we'll do is scan the image at the user's URL to get the pixel coordinates of each face. This returns a list with one tuple of four integers per face. They are called pixel coordinates because each integer is a pixel position marking one edge of the rectangle that contains the face, in the following order: top, right, bottom, left. These numbers are useful for comparing faces, for putting the name tags on the image in the frontend, and even for validating images passed in by the user: if the list returned is empty, there were no faces in the image. I'll go into further detail about validating user input in a future post.
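To make the coordinate order concrete, here is a small sketch with two hypothetical helpers: `has_faces()` for the empty-list check and `to_box()`, which turns a `(top, right, bottom, left)` tuple into the x/y/width/height values a frontend might use to position a name tag. The box layout is an assumption; the post's React code isn't shown, though the arithmetic would be the same in JavaScript.

```python
from typing import Dict, List, Tuple

# face_recognition.face_locations() returns one (top, right, bottom, left) tuple per face
Location = Tuple[int, int, int, int]


def has_faces(locations: List[Location]) -> bool:
    """An empty list means no faces were detected in the image."""
    return len(locations) > 0


def to_box(location: Location) -> Dict[str, int]:
    """Convert (top, right, bottom, left) into x/y/width/height in pixels."""
    top, right, bottom, left = location
    return {"x": left, "y": top, "width": right - left, "height": bottom - top}
```

For the "Unknown" face in the first example response, `to_box((81, 442, 236, 287))` gives a 155 by 155 pixel box whose top-left corner is at x=287, y=81.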

I decided to put the process of getting the coordinates for an image URL into its own method, getCoordinates(), on a small Url class:

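```python
import os

import face_recognition
import requests


class Url:
    def __init__(self, url):
        self.url = url

    def getCoordinates(self):
        # download the image and write it to a temporary file
        data = requests.get(self.url).content
        with open('img.jpg', 'wb') as f:
            f.write(data)

        # load the file into memory, then delete the temporary file
        image = face_recognition.load_image_file('img.jpg')
        os.remove('img.jpg')

        # one (top, right, bottom, left) tuple per face found
        return face_recognition.face_locations(image)
```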

I take the URL provided by the user, create a temporary image file, load it with Face Recognition's load_image_file() function, delete the temporary file, and then, in the last line, ask Face Recognition to find and return the face locations. That's the beauty of using libraries like this one: a lot of the messy details, such as how to locate faces and compute pixel coordinates, are abstracted away. All we need to do is read the documentation and tinker a bit to understand what to provide and what gets returned.

With the face coordinates in hand, the next step was to compare each of these faces to the images in the user's database. To do this, I needed to take each set of coordinates and create a separate image file containing just that face. Note that before this code, I saved the user's image as "bigPicture.jpg".

```python
# Image here is Pillow's: from PIL import Image
# get the coordinates of the faces
coordinates = self.getCoordinates()

# iterate through each face, as defined by the coordinates
for index, coordinate in enumerate(coordinates):
    # face_recognition orders each location as (top, right, bottom, left)
    top, right, bottom, left = coordinate
    foundMatch = False

    # temporarily save an image of just that face;
    # Pillow's crop() expects the box as (left, top, right, bottom)
    with Image.open('bigPicture.jpg') as im:
        im.crop((left, top, right, bottom)).save('target.jpg')

    unknown_image = face_recognition.load_image_file('target.jpg')
    os.remove('target.jpg')
```

Eagle-eyed observers will notice that the order of pixel coordinates returned by the Face Recognition library (top, right, bottom, left) is different from the order expected by my Image objects, which come from Pillow, a great image-processing library. Pillow's crop() takes its box as (left, top, right, bottom), and its Image objects make it easy to crop and save images, as seen above. It took a few minutes of fiddling to get the pixel coordinates figured out, but I'd be lying if I told you I didn't enjoy solving little mysteries like this.
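The reordering can live in one small function. The sketch below is hypothetical (`to_pillow_box()` and its `padding` option are not from the post): it converts a face_recognition location into Pillow's `(left, upper, right, lower)` box and can optionally pad the crop, clamped to the image bounds. Padding is an assumption worth testing, on the idea that a slightly looser crop gives the face detector more context when the cropped face is encoded again.

```python
from typing import Tuple

Location = Tuple[int, int, int, int]  # (top, right, bottom, left), as from face_locations()
Box = Tuple[int, int, int, int]       # (left, upper, right, lower), as Pillow's crop() expects


def to_pillow_box(location: Location, image_size: Tuple[int, int], padding: int = 0) -> Box:
    """Reorder a face_recognition location for Pillow, optionally padded and clamped.

    `image_size` is (width, height), the same order as Pillow's Image.size.
    """
    top, right, bottom, left = location
    width, height = image_size
    return (
        max(left - padding, 0),
        max(top - padding, 0),
        min(right + padding, width),
        min(bottom + padding, height),
    )
```

In the loop above, the crop line would read `im.crop(to_pillow_box(coordinate, im.size, padding=20)).save('target.jpg')`.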

Once I have the faces from the user's image in their own files, all I need to do is compare them to the faces we know in the database. The Face Recognition library loads each face image and then creates encodings to make comparisons, so I start by loading all the known faces the user has uploaded to get them ready for encoding:

```python
# get the user's image objects from the db
image_objects = User.query.filter_by(id=user_id).first().images

em_encodings = []

for im_ob in image_objects:
    # download each known image to a temporary file
    dbData = requests.get(im_ob.url).content
    with open('dbImage.jpg', 'wb') as f:
        f.write(dbData)

    # store the loaded image (not yet an encoding) alongside the person's name
    em_encodings.append({
        "enc": face_recognition.load_image_file('dbImage.jpg'),
        "name": im_ob.name,
    })
```
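Note that `em_encodings` holds loaded images rather than encodings, so the matching loop calls `face_encodings()` on every known image again for each unknown face, and encoding is the expensive step. A sketch of computing each known encoding once, up front: `build_known_encodings()` is a hypothetical helper, and its `encode` parameter would be `face_recognition.face_encodings` in the real app (the test passes a stand-in function so the sketch runs without the library).

```python
from typing import Any, Callable, List, Sequence, Tuple


def build_known_encodings(
    named_images: Sequence[Tuple[str, Any]],
    encode: Callable[[Any], List[Any]],
) -> List[Tuple[str, Any]]:
    """Encode each known image exactly once, keeping (name, first_encoding) pairs.

    `encode` is expected to behave like face_recognition.face_encodings:
    it returns a list with one encoding per face found, or [] if none.
    """
    known = []
    for name, image in named_images:
        encodings = encode(image)
        if encodings:  # skip images where no face could be encoded
            known.append((name, encodings[0]))
    return known
```

With the real library, this would be called once per request as `build_known_encodings([(e["name"], e["enc"]) for e in em_encodings], face_recognition.face_encodings)`, before looping over the unknown faces.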

The last and largest piece of the puzzle is to iterate over each of the unknown faces and look for a match with the encodings from the database. The code below is executed for each face that needs to be identified:

```python
# encode the cropped face once; it doesn't change inside the loop below
unknown_encs = face_recognition.face_encodings(unknown_image)

# iterate through the known images and look for a match
for encoding in em_encodings:
    known_encs = face_recognition.face_encodings(encoding["enc"])
    # face_encodings() returns [] when it can't find a face, so check both
    if known_encs and unknown_encs:
        # `tolerance` and `res` are defined earlier in the method (not shown)
        results = face_recognition.compare_faces([known_encs[0]], unknown_encs[0], tolerance=tolerance)
        if results[0]:
            res.append({"name": encoding['name'], "coordinates": coordinate})
            foundMatch = True
            break

if not foundMatch:
    res.append({"name": "Unknown", "coordinates": coordinate})
```

The encoding of each known face is compared to that of the unknown face on each iteration. If there is a match, the name and coordinates are appended to our result list, res. If no match is found, we pass "Unknown" as the name for that set of coordinates. Once the iterations are done, we delete the temporary image files and return res. This sends the names and pixel coordinates back to the frontend so the image can be rendered with name tags.
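Under the hood, `compare_faces()` reports a match when the Euclidean distance between two face encodings is at most `tolerance` (the library's default is 0.6; lower is stricter). The sketch below spells out that rule in plain Python with a hypothetical `identify()` helper. Unlike the loop above, which stops at the first match, it returns the closest known face within tolerance, a variant that may help when two known people look alike. Real encodings are 128-number arrays; short tuples stand in for them here.

```python
import math
from typing import Sequence, Tuple

Encoding = Sequence[float]


def identify(
    unknown: Encoding,
    known: Sequence[Tuple[str, Encoding]],
    tolerance: float = 0.6,
) -> str:
    """Return the name of the closest known encoding within `tolerance`, else "Unknown".

    Uses the same match rule as face_recognition.compare_faces:
    Euclidean distance <= tolerance.
    """
    best_name, best_dist = "Unknown", tolerance
    for name, encoding in known:
        dist = math.dist(unknown, encoding)
        if dist <= best_dist:  # within tolerance and at least as close as the best so far
            best_name, best_dist = name, dist
    return best_name
```

Each result then becomes `{"name": identify(enc, known), "coordinates": coordinate}`, so the "Unknown" fallback no longer needs a separate `foundMatch` flag.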

Example backend response:

```json
{
  "data": [
    {
      "name": "Ron",
      "coordinates": [110, 497, 239, 368]
    },
    {
      "name": "Harry",
      "coordinates": [354, 497, 483, 368]
    },
    {
      "name": "Hermione",
      "coordinates": [569, 497, 698, 368]
    }
  ]
}
```

Resulting image when the frontend uses that info to display a name for each face:

Learning new libraries and getting all the little wires and tubes to connect between one module and another is one of my favorite parts of programming. Each step of the process had its own little victories: getting that first array of coordinates, successfully cropping an image by rearranging the pixel coordinates into the correct order for Pillow, and eventually seeing the names show up in the frontend. If it were easy, it wouldn't be nearly as much fun, so get out there and experiment with whatever libraries catch your interest.